Rafael Yuste is Professor of Biological Sciences at Columbia University. A neuroscientist and science advocate, he led the researchers who spearheaded the U.S. BRAIN Initiative and the International BRAIN Initiative. You may follow him on Twitter @yusterafa.

Jared Genser is an international human rights lawyer, Managing Director of Perseus Strategies, and an Adjunct Professor of Law at Georgetown University Law Center. You may follow him on Twitter @jaredgenser.

Stephanie Herrmann is a legal extern with Perseus Strategies and J.D. candidate at American University Washington College of Law, expected to graduate in May 2021. You can follow her on Twitter @SRHerrm.

SINCE its adoption in 1948 by the United Nations General Assembly, the Universal Declaration of Human Rights has served as a moral beacon over the post-World War II world. The Universal Declaration has been both inspiration and aspiration, providing a common set of values and ethical guidelines for governments, corporations, and individuals. It has inspired the widespread adoption, for example, of the International Covenant on Civil and Political Rights (ICCPR), a multilateral treaty adopted by 173 countries and now covering more than 90 percent of the world’s population. And it has led to more focused treaties addressing torture, disappearances, racial discrimination, and the rights of women, children, and people with disabilities. It has spoken principle to power in over 500 languages and is the most widely-translated document in the world.

At the same time, the human rights landscape has evolved enormously since the Universal Declaration was adopted; our present world threatens human rights violations that its framers could not have foreseen. Technological advancements are redefining human life and are transforming the role of humans in society. In particular, neurotechnology—or methods to record, interpret, or alter brain activity—has the potential to profoundly alter what it means to be human. The brain is not just another organ, but the one that generates all of our mental and cognitive activity. All of our thoughts, perceptions, imagination, memories, decisions, and emotions are generated by the orchestrated firing of neural circuits in our brains. For the first time in history, we are facing the real possibility of human thoughts being decoded or manipulated using technology. Although neurotechnology presents critical opportunities for scientific and medical breakthroughs, and it will open a vast new field for economic development, it also presents unprecedented human rights implications.

Neurotechnology has tremendous potential to improve the human condition and advance our species but, precisely because it can be so transformative, it also raises fundamental human rights challenges that were never envisioned by today’s international human rights treaties. Consequently, existing treaties cannot offer the robust and comprehensive human rights protection that a neurotechnological world requires. Instead, today’s era calls for a novel protection framework: neuro-rights.

Neurotechnology Today

Neurotechnology is making possible what was previously science fiction. Companies and governments are developing devices that would allow people to communicate by thinking, to decipher others’ thoughts by reading their brain data, and to have access to all of the internet’s databases and capabilities inside their minds. Additionally, scientists around the world are developing neurotechnology that could lead to new therapies for mental illness and neurological diseases, such as Alzheimer’s, schizophrenia, stroke, post-traumatic stress disorder, depression, or addiction. The many forms of neurotechnology have led to endless possibilities for shaping daily life. To appreciate the human rights impact of neurotechnology, however, it is important to understand how it works.

At the heart of neurotechnology are brain-computer interfaces (“BCIs”): devices that connect a person’s brain to a computer or to another device outside the human body, such as a smartphone. BCIs allow bidirectional communication between the brain and the outside world, exporting brain data or altering brain activity, and they come in two forms. They can be either invasive (placed inside a person’s skull) or non-invasive (like a helmet worn on the head). Both types of neurotechnology bring to light specific gaps in regulation which, in turn, give rise to gaps in human rights protection.

Some BCIs are invasive and require surgery to place electrodes directly into a person’s brain. The electrodes send brain data to a computer, where it can be analyzed and decoded. Invasive BCIs have been used in mainstream medicine for years; some familiar examples of invasive BCIs are cochlear implants, or the deep brain stimulators which can help people with Parkinson’s disease regain mobility. Scientists have also shown how invasive BCIs can help people with missing or damaged limbs to feel heat and cold through their prostheses. For example, implanted with a BCI developed by BrainGate, a person with Amyotrophic Lateral Sclerosis (ALS) who previously could not speak or move now can write and send emails, Google random questions, and shop on Amazon using an off-the-shelf Android tablet. The opening kick of Brazil’s 2014 Soccer World Cup was given by a tetraplegic person wearing a robotic exoskeleton controlled by a BCI. It is expected that in coming years, BCIs will even be able to provide effective visual prostheses for blind persons, which would enhance their ability to sense proximity in the world around them.

Although there have been many remarkable applications in medicine, invasive BCIs can be used in other ways. In 2018, the MIT Media Lab used an invasive BCI to transcribe human thoughts into typed messages. And Neuralink, owned by Elon Musk, announced it is developing a wireless implantable chip to link human minds to computers to create “superhuman” cognition by enhancing humans with AI. Scientists have already discovered how to use invasive BCIs to control the actions of laboratory animals, including mice. While a mouse is performing an action, such as eating, the BCI records its brain data. Scientists can then use this data to reactivate and stimulate the same parts of the mouse’s brain that were previously recorded and cause the mouse to eat again—even if the mouse did not want to eat. This same process has already been used for the artificial implantation of memories or images into a mouse’s brain, generating hallucinations and false memory of fear that, importantly, are indistinguishable from the real world.

By contrast, a non-invasive BCI does not touch the brain; instead, it rests on a person’s head. “Wearable” BCIs, such as helmets, glasses, and diadems, can be used to predict a person’s intended speech or movement. These devices could also help people with expressive or communicative conditions to communicate by decoding the images in a person’s mind. Indeed, scientists have successfully shared images and words between two people in different rooms using non-invasive BCIs, effectively allowing the two to exchange thoughts. But non-invasive BCIs could do much more. They have already enabled a man who is quadriplegic to drive a Formula One race car.

Beyond decoding neuronal activity, BCIs can be coupled with methods similar to the one described above, which record and then stimulate the brain, to effectively control animals’ movement. And in addition to reading and analyzing human brain activity, non-invasive BCIs may one day be used to alter it. What can be done with mice today could be done with humans tomorrow.

As is clear from these examples, applications of neurotechnology are replete with possible human rights violations. As often happens with new technologies, the development of neurotechnology has vastly outpaced countries’ and international organizations’ attempts to regulate it. Invasive BCIs require surgery and are currently regulated under the domain of medicine—but non-invasive BCIs, which will be used for the same purposes as invasive ones, often fall outside of medical regulations. In most countries, non-invasive BCIs are considered consumer items, and—to the extent they are regulated at all—may be classified under pre-existing frameworks that are inadequate to address the unique challenges posed by this new technology.

From Laboratories to Industry

A neurotechnology revolution has been spearheaded by government bodies in the United States, China, and other countries; they are likely also developing non-medical neurotechnology for military and surveillance uses that are not fully explored or regulated by either national laws or international treaties. Sparked by U.S. President Barack Obama’s 2013 BRAIN Initiative, which funded public research for developing neurotechnology and artificial intelligence, countries around the world have begun to heavily fund similar research projects. And, in parallel with progress in scientific laboratories and in governments, neurotechnology development is increasingly happening in industry, to the point that, in the U.S., private-sector investment in developing new neurotechnology is now outpacing federal funding.

Indeed, in the past 20 years, over $19 billion globally has been invested in more than 200 neurotechnology companies. For example, Facebook’s “Brain to Text” project, which started in 2017, is building a non-invasive BCI to decode human thoughts at a rate of 100 words per minute and write them on a computer screen. In 2019, Facebook acquired CTRL-Labs for a reported $1 billion, because the company had developed a wristband that may be the first consumer product to use neural activity to translate intentions, gestures, and motions into computer control or movements of a robotic avatar. The startup Kernel released its “KernelFlow” device in the fall of 2020: a helmet which can map brain activity with unprecedented accuracy and resolution. Many other portable non-invasive BCIs are being developed to produce images of brain activity. Given the great progress in decoding brain activity using functional magnetic resonance imaging (fMRI) scanners, whereby researchers can decipher with increasing accuracy images that one freely conjures in the mind, it is only a matter of time until the output of portable brain scanners can be systematically decoded.

As companies and governments continue to invest in and develop neurotechnology, one can reasonably conclude that unexplored ethical and legal dilemmas will continue to arise. In the absence of an international regulatory framework, these dilemmas will inevitably result in human rights violations.

Neurotechnology and Human Rights

Given the pace of progress and the profound consequences that neurotechnology has for the human experience, the current era will likely be remembered as the time that neurotechnology rose to prominence and the international community embraced unprecedented opportunities for public-private partnerships, innovation, and medical advancement. At the same time, the pace of neurotechnology innovation has underscored the need for guardrails, in the form of principles and policies, technology safeguards, and national and international regulations to protect human rights.

The challenge of the coming years will be to create such guardrails that predispose good outcomes when neurotechnology matures and pervades multiple sectors. To build this new system, it is essential to understand the ethical concerns that neurotechnology raises.

Neurotechnology raises unique ethical concerns because, unlike predecessor technologies, it directly interacts with and affects the brain. Media reports in recent years have uncovered only some of the uses of neurotechnology around the world that arguably infringe upon human rights. For instance, reports have shown footage of Chinese primary schools which require students to wear headsets to record their concentration levels. This brain data is stored on the teacher’s computer and is later shared with parents without the child’s consent.

Because the brain stores sensitive information and learned tasks, neurotechnology may make this information dangerously accessible in the near future. Hypothetical scenarios that previously seemed outlandish are conceivable today. For example, brain decoding of images in response to questions could be used for effective interrogation of prisoners or even of kidnapped leaders, potentially creating a national security crisis. Alternatively, what if a hiring algorithm discriminated against a prospective employee at a company because it misinterpreted her brain data? Algorithms are capable of developing biases that mimic human ones, such as those based on race or gender. Each of these scenarios highlights a different ethical quandary posed by neurotechnology, which can be intentionally or accidentally abused by its users.

As neurotechnology will likely expand beyond medicine and into sectors including education, gaming, entertainment, transportation, law, research, and the military, it is critical to ensure its ethical application and accessibility. There is some overlap between the ethical concerns associated with neurotechnology and those associated with other biological and computational technologies, such as genomics and artificial intelligence. Some of these overlapping ethical concerns include data security, transparency, fairness, and well-being. However, neurotechnology uniquely raises two novel ethical challenges which are not presented by other forms of technology: mental privacy and human agency.

Private Thoughts & Free Will

These two ethical issue areas shine a spotlight on the protection gaps in existing international human rights treaties and underscore the need for new human rights to be created. Mental privacy refers to the presumption that the contents of a person’s mind are only known to that person. In the age of neurotechnology, the presumption of mental privacy is no longer a certainty.

Most brain data generated by the body’s nervous system is unconsciously created and outside a person’s control. Therefore, it is plausible that a person would unknowingly or unintentionally reveal brain data while under surveillance. Nevertheless, the concept of mental privacy is not contemplated within Article 17 of the ICCPR, which prohibits unlawful or arbitrary interferences with privacy. The General Comment—that is, the interpretation of Article 17—not only fails to mention technology, but it also fails to discuss the privacy of a person’s thoughts.

Human agency refers to a person’s free will and bodily autonomy. Because neurotechnology can be used to stimulate a person’s brain, it has the capacity to influence a person’s behavior, thoughts, emotions, or memories. While there are numerous mentions across existing international human rights treaties of freedom of thought and freedom from coercion to adopt particular beliefs, it is unclear whether these provisions envisioned possible coercion through technology. For example, Article 18(1) of the ICCPR protects the universal right to freedom of thought, conscience, and religion. Article 18(2) says that a person shall not be subjected to coercion which impairs his ability to adopt a belief of his choosing. Nonetheless, the General Comment on Article 18 makes no mention of technological means.

While the existing system for international human rights protection could partially cover the human rights issues that neurotechnology raises, for example through the broad definitions provided in the Convention Against Torture and Other Cruel, Inhuman, or Degrading Treatment or Punishment, it is incomplete, imprecise, and not adapted to the future. It is crucial both to conceptualize the human rights violations that could conceivably be caused by the use or abuse of neurotechnology, in order to protect individual autonomy and mental privacy, and to promote the technology’s safe, transparent, and effective use.

Closing the Protection Gap

To close protection gaps under the existing international human rights system and to protect people from the unique concerns associated with neurotechnology, researchers and bioethicists have proposed a new international legal and human rights framework—the so-called neuro-rights—which can be understood as a new set of human rights to protect the brain.

Proposed neuro-rights include (1) the right to identity, or the ability to control both one’s physical and mental integrity; (2) the right to agency, or the freedom of thought and free will to choose one’s own actions; (3) the right to mental privacy, or the ability to keep thoughts protected against disclosure; (4) the right to fair access to mental augmentation, or the ability to ensure that the benefits of improvements to sensory and mental capacity through neurotechnology are distributed justly in the population; and (5) the right to protection from algorithmic bias, or the ability to ensure that technologies do not insert prejudices.

These ethical areas build upon and expand existing international human rights for the protection of human dignity, liberty and security of the person, non-discrimination, equal protection, and privacy. However, these are very generic terms, often subject to interpretation, and the ramifications of neurotechnology require specificity. Furthermore, a comprehensive framework does not yet exist to address the wider scope and range of possible neuro-rights violations.

Currently, there is no international consensus on what constitutes neuro-rights. Chile is the only country with a proposed law and constitutional amendment mandating neuroprotection and explicitly protecting neuro-rights. Both have been approved by the Chilean Senate. In addition, the Spanish Digital Rights Charter—recently announced by the Secretary of State of Digitalization and AI from the Government of Spain—represents another pioneering effort to explore the human rights landscape of the digital era and incorporates the five proposed neuro-rights enumerated above.

Moreover, existing international instruments which address neuro-ethics or technology are still nascent. The Organization for Economic Cooperation and Development’s Recommendation on Responsible Innovation in Neurotechnology is one of the few examples in which an international organization has considered neurotechnology. While these frameworks discuss safety, consent, and privacy issues associated with neurotechnology, they fall short of addressing the dangers of identity abuse, unfair access, bias and discrimination, state responsibilities and duties, or additional human rights which may be infringed through neurotechnology.

A Neuro-Rights Agenda for the UN

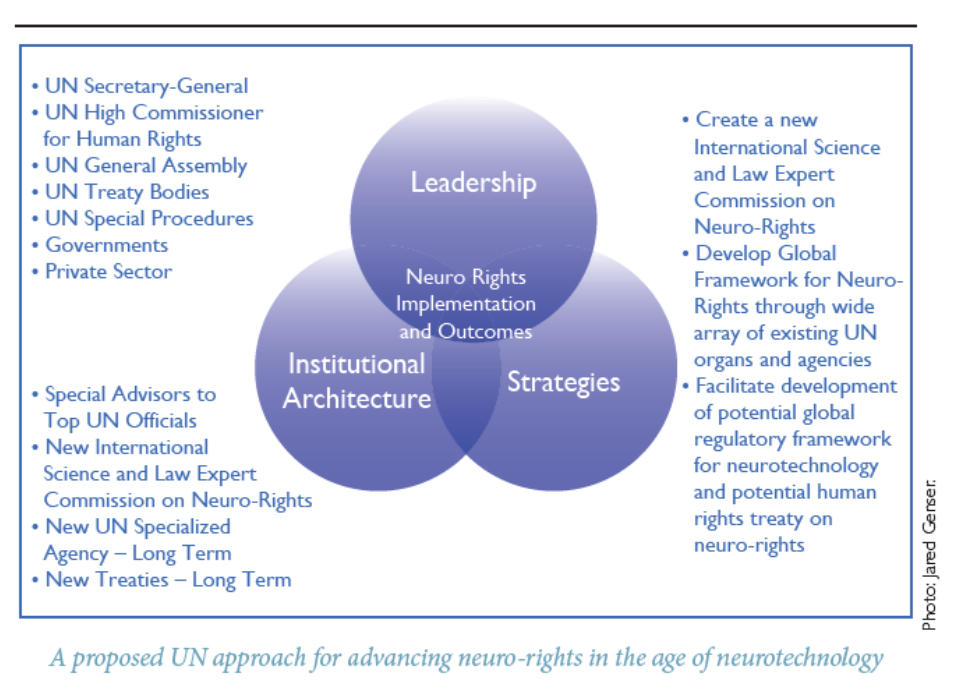

Delivering neuro-rights to the world will require bold leadership, new institutional architecture, and focused strategies. Due to the caliber of the problem, the fact that it affects the entire world, and its direct impact on the work of the United Nations to promote and protect human rights, we think that the UN is the logical forum in which to properly address it. While progress is never immediate, the UN could divide its actions into both short- and long-term solutions to continuously generate momentum for protecting neuro-rights.

What follows are three short-term and four long-term potential measures which could be taken to diminish the risks posed by the widespread adoption of neurotechnology in the absence of any ethical or regulatory guardrails.

Short-term measures could help build a consensus definition of neuro-rights and thereby consolidate neurotechnology research and regulatory practices. First, UN Secretary-General António Guterres and UN High Commissioner for Human Rights Michelle Bachelet should, in consultation with the treaty bodies and special procedures, create an International Science and Law Expert Commission on Neuro-Rights. The Commission should comprise both lawyers with international human rights law expertise and scientists with neuroscience and neuro-ethics expertise. The Commission could draw its members from academia, the private sector, and non-governmental organizations. This Commission would specifically aim to develop an international consensus definition of neuro-rights through the exchange of scientific knowledge and the application and development of human rights law.

Second, both these UN officials could appoint highly-qualified experts to serve as Special Advisers on Neuro-Rights. In this capacity, these advisers should identify the best regulatory practices in countries around the world, investigate alleged misuses of neurotechnology, and remain apprised of the latest scientific research. These advisers would also collaborate with the treaty bodies and special procedures to facilitate the long-term development of a framework for protecting neuro-rights, such as a potential international regulatory framework for neurotechnology and a potential new human rights treaty on neuro-rights.

Third, both the neuro-rights advisers and the Commission could hold regular consultations with key countries which have advanced neurotechnology or artificial intelligence research programs, including the United States, the UK, Canada, Australia, Russia, China, Japan, South Korea, and applicable EU member states, as well as countries with existing neuroprotection regulation, such as Chile and Spain. The advisers and the Commission should also encourage these countries to be in frequent dialogue outside of the UN when possible.

Long-term measures could develop both a framework for the protection and promotion of neuro-rights and a mechanism for monitoring countries’ activities on neurotechnology.

First, the UN General Assembly, the UN Human Rights Council, and other relevant bodies could either create a new treaty or propose a protocol of additions to existing treaties to incorporate neuro-rights. This measure will ensure that there are specific treaty bodies capable of further defining neuro-rights under international law.

Second, the UN Human Rights Council and its special procedures should encourage existing treaty bodies, such as the UN Committee Against Torture and the Human Rights Committee, to adopt General Comments on neuro-rights. These General Comments may interpret provisions in existing treaties as applying to neurotechnology, or they may interpret the scope of individual neuro-rights.

Third, the UN Human Rights Council could appoint a Special Rapporteur on the Impact of Neurotechnology on Human Rights. The Special Rapporteur would travel to specific countries, monitor their progress or violations of neuro-rights, and publish reports of their findings.

Fourth, the UN should consider the creation of a specialized agency to coordinate global neuro-rights activities and to help codify neuro-rights into an international human rights treaty.

The Way Forward

The technological challenges facing the world today are wholly unprecedented. The rapid development of neurotechnology is occurring in a vacuum of regulation in nearly every country and international organization. Sovereign states will ultimately create their own laws to address neurotechnology, but because this technology affects the human mind, the issue squarely impinges on human rights. Therefore, the United Nations should forge a path for states by setting global standards for the protection of neuro-rights.

When considering the diverse challenges neurotechnology poses for humanity, many may feel daunted by the number of ways in which neurotechnology can infringe upon human rights. However, effective multilateral cooperation can cause the law to both evolve and serve all countries in a technologically shifting world. Although it has never been modified, the Universal Declaration of Human Rights proclaims that the “advent of a world in which human beings shall enjoy freedom of speech and belief and freedom from fear and want has been proclaimed as the highest aspiration of the common people.” The advent of neurotechnology—with transformative yet unsettling consequences—is upon us; and the law must evolve to promote a world where technological advancements do not endanger the rights that the international community has long fought to protect.

Although many human rights instruments and treaty bodies already exist, they never envisioned the world in which we live today. The United Nations cannot afford not to take action in the face of this profoundly transformative technology. It must act with urgency to bolster human rights protection through the incorporation of neuro-rights into the human rights protection system. While it can be a challenging endeavor, it will enable people around the world to harness neurotechnology’s full potential.